Overview

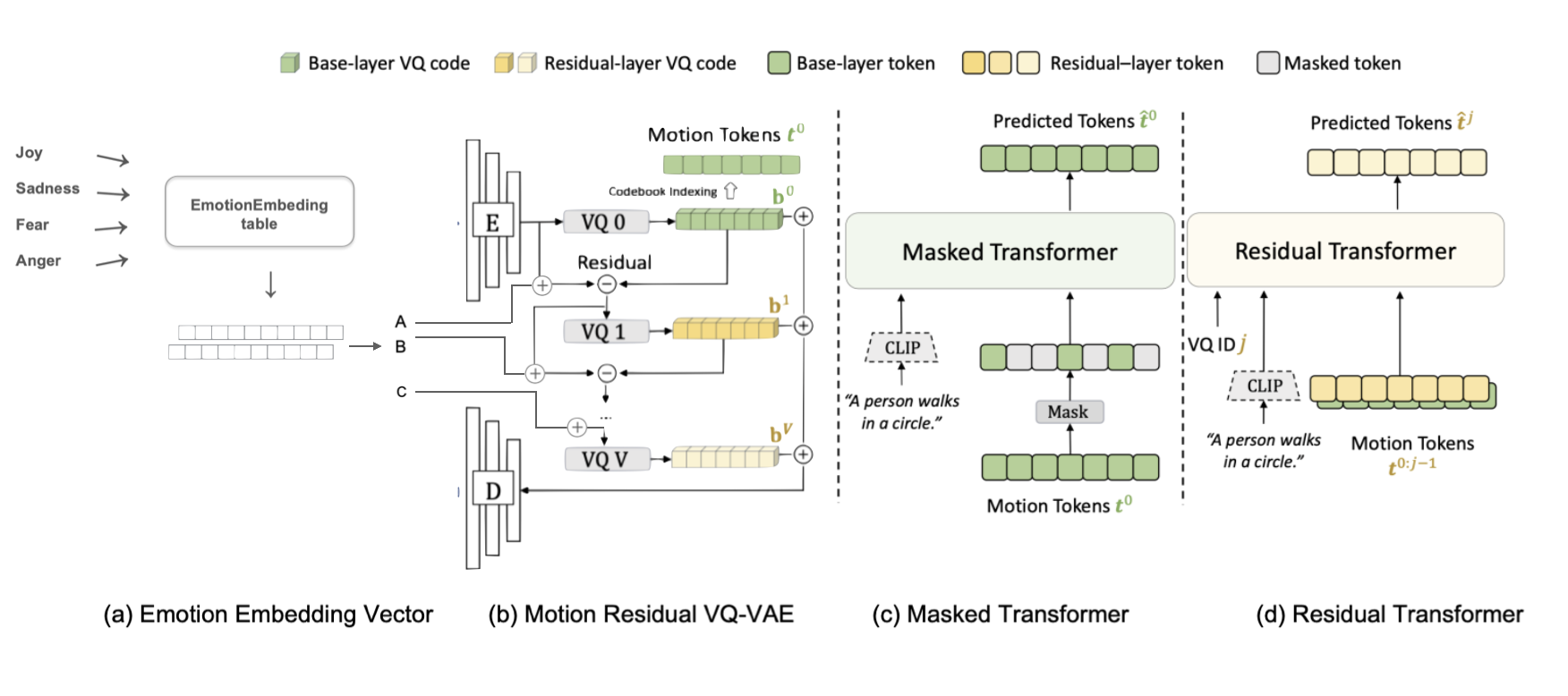

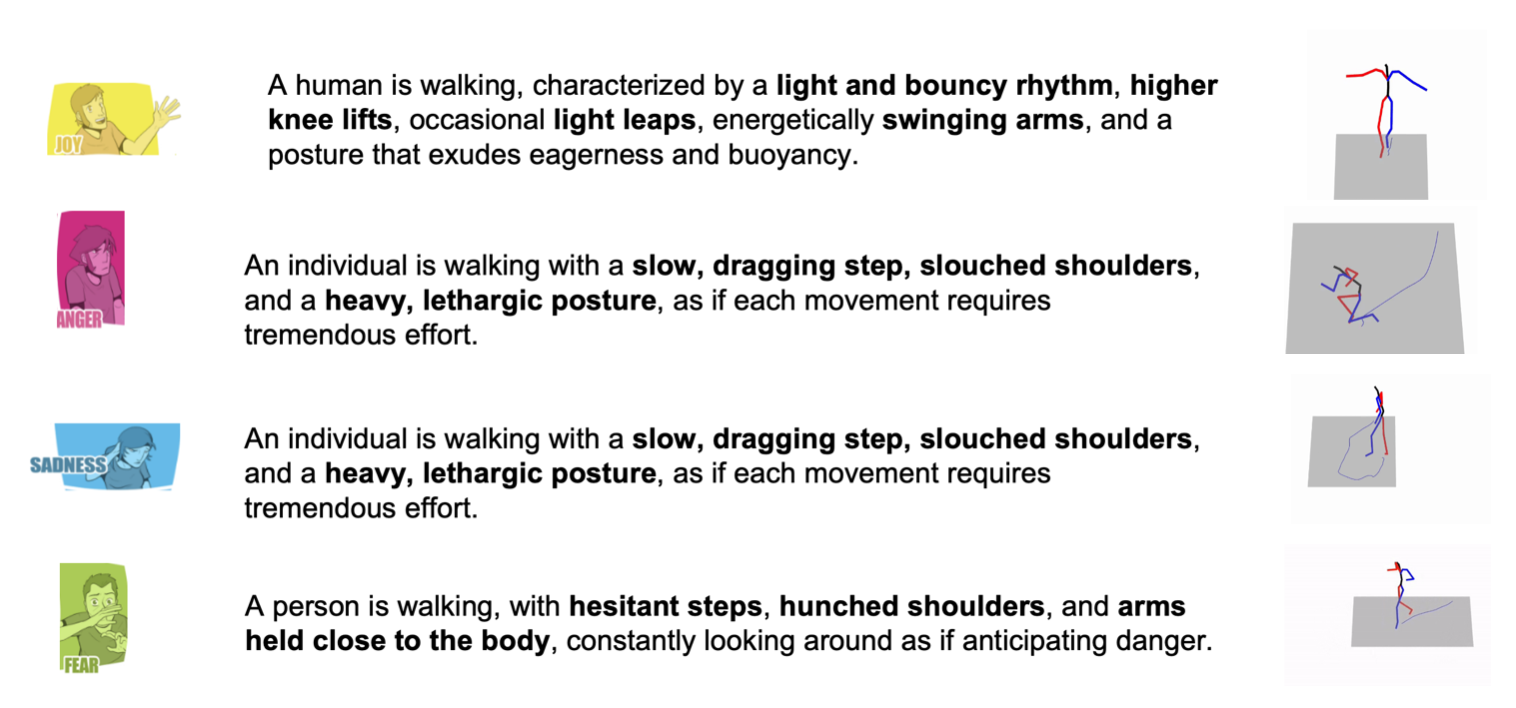

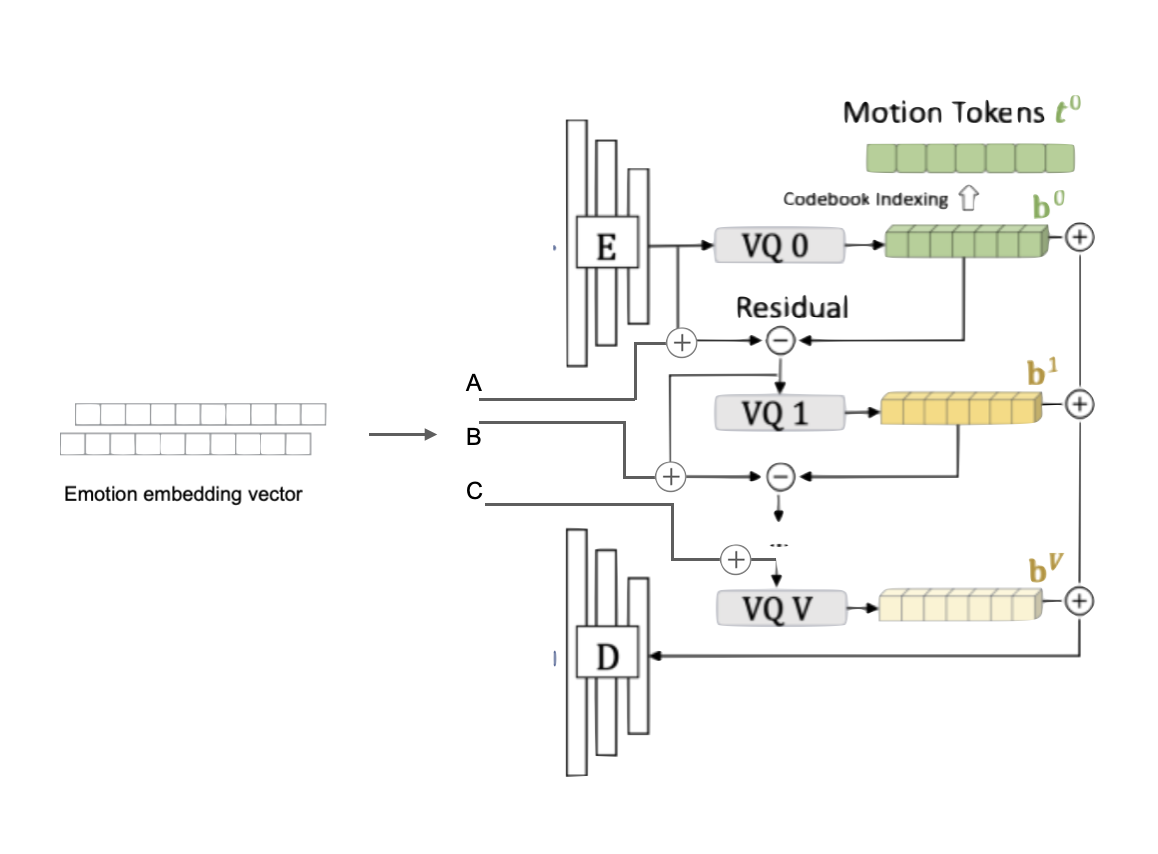

Our approach combines emotion understanding with motion generation using the EmotionEmbedder module.

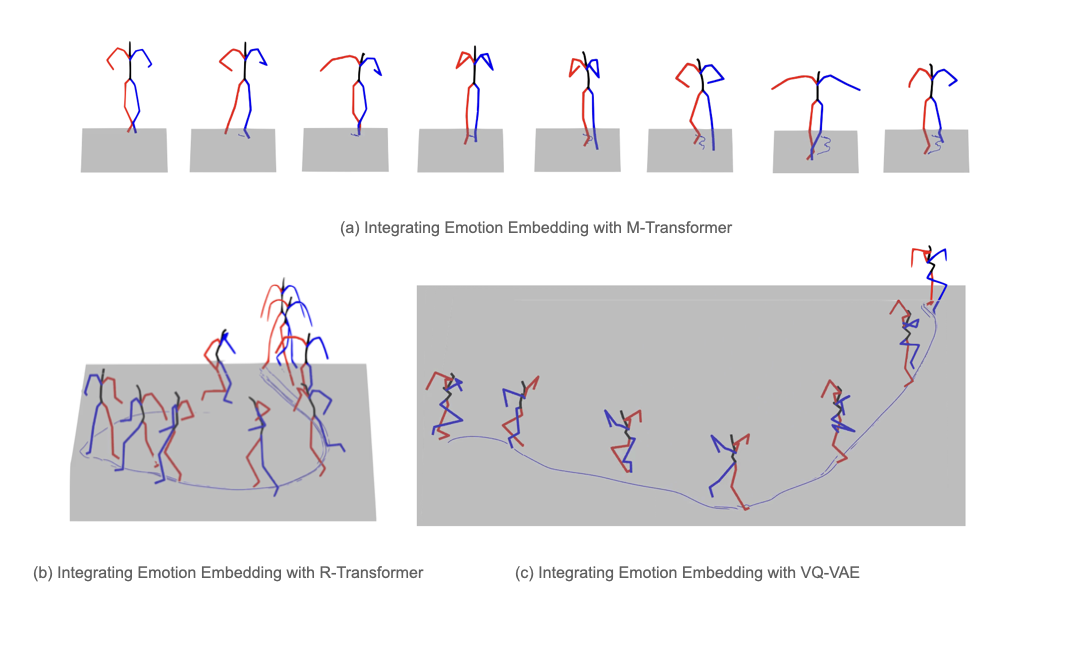

This project extends the motion generation model MoMask with emotion understanding. We built an EmotionEmbedder module that guides the motion generation process with emotional context. In our experiments, adding the emotion information at the input of the M-transformer (MoMask's masked transformer) worked best. We also explored different training strategies and identified several challenges, such as the limitations of mean squared error (MSE) as a measure of similarity between motions.
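
One well-known weakness of MSE for motion can be shown with a toy 1-D example. It is illustrative only and not taken from our experiments: the trajectory, the frame count `T`, and the helper `mse` are hypothetical. A single joint swings through one full oscillation. MSE rates a completely motionless sequence as closer to this reference than the same swing started a quarter period late, even though the late swing is perceptually far more similar.

```python
import math


def mse(x, y):
    """Mean squared error between two equal-length sequences of per-frame values."""
    if len(x) != len(y):
        raise ValueError("sequences must have the same number of frames")
    return sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)


T = 40  # number of frames (hypothetical)
# Toy 1-D "joint trajectory": one full oscillation, e.g. an arm swinging.
reference = [math.sin(2 * math.pi * t / T) for t in range(T)]
# The same swing, started a quarter period later.
shifted = [math.sin(2 * math.pi * (t - T / 4) / T) for t in range(T)]
# No motion at all: the joint stays at the trajectory's mean position.
static = [0.0] * T

print(f"MSE(reference, shifted) = {mse(reference, shifted):.3f}")  # 1.000
print(f"MSE(reference, static)  = {mse(reference, static):.3f}")   # 0.500
```

Because the static sequence scores better, a loss or metric based on plain MSE can favor averaged, low-energy motion over expressive motion with slightly different timing.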

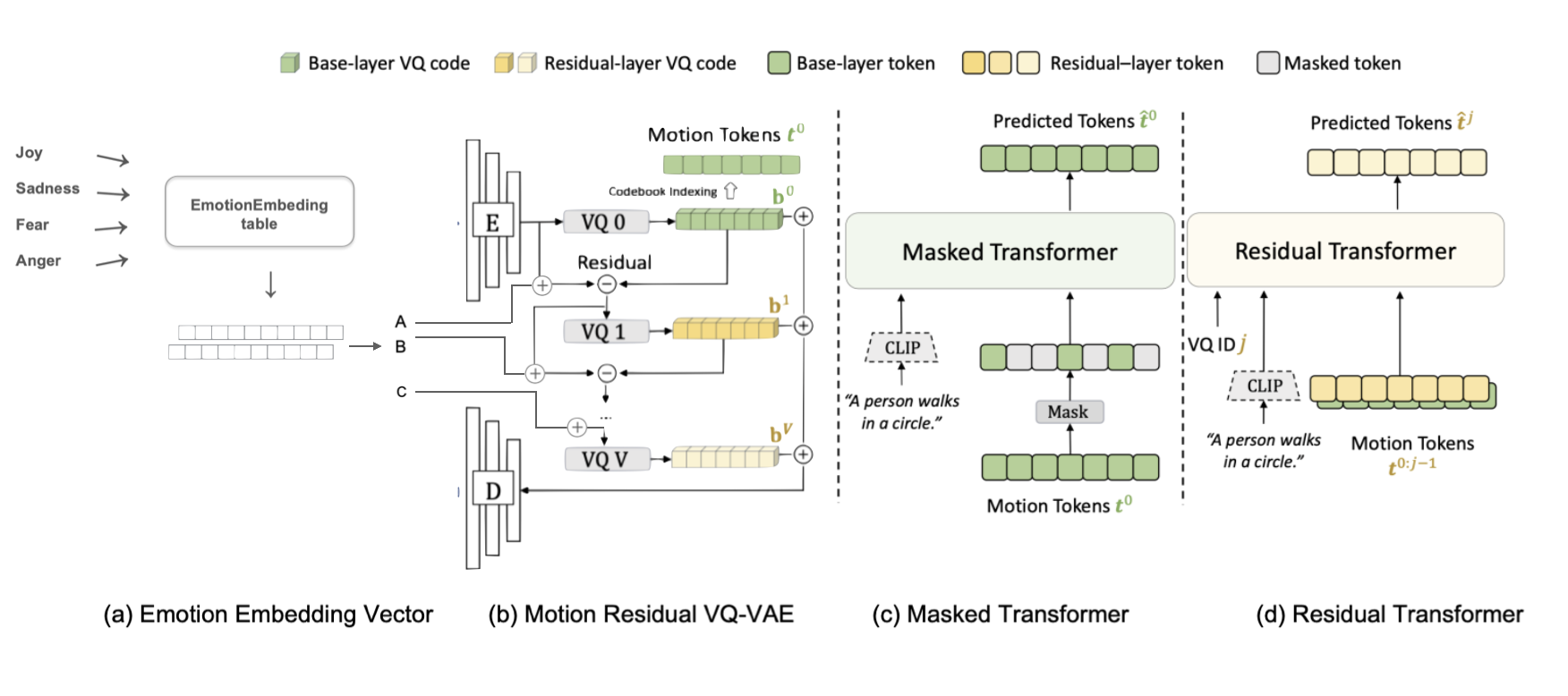

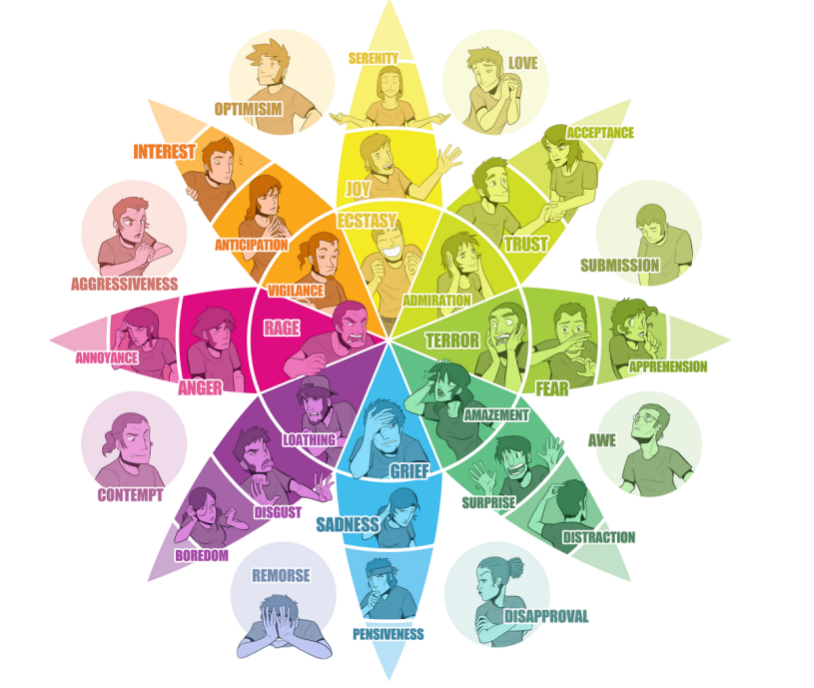

We focused on four of the eight basic emotions in Plutchik's wheel: Joy, Sadness, Fear, and Anger. The EmotionEmbedder converts each of these emotions into a vector that guides motion generation.
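
A minimal sketch of what such an embedder can look like, assuming it is a learned lookup table with one vector per emotion. The `Emotion` enum, the `EmotionEmbedder` class layout, `dim`, and the initialization are illustrative assumptions, not the project's code. It is written in plain Python so it runs without a deep-learning framework; in PyTorch the table would typically be an `nn.Embedding(4, dim)`.

```python
import random
from enum import Enum


class Emotion(Enum):
    """The four Plutchik emotions used in this project."""
    JOY = 0
    SADNESS = 1
    FEAR = 2
    ANGER = 3


class EmotionEmbedder:
    """Hypothetical sketch: a lookup table holding one vector per emotion.

    In a trained model the table entries would be learned parameters; here they
    are only small random initial values, so the vectors carry no meaning yet.
    """

    def __init__(self, dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)  # seeded so the example is reproducible
        self.dim = dim
        # One row per emotion, in definition order (JOY, SADNESS, FEAR, ANGER).
        self.table = [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in Emotion]

    def __call__(self, emotion: Emotion) -> list[float]:
        # Return a copy so callers cannot modify the stored vector in place.
        return list(self.table[emotion.value])


embedder = EmotionEmbedder(dim=8)
fear_vec = embedder(Emotion.FEAR)
print(len(fear_vec))  # 8
```

A lookup table keeps the four emotions as independent, trainable directions in the embedding space; richer variants (for example, weighting a vector by emotion intensity) would build on the same table.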

We compared several points at which to integrate the emotion embedding. Adding it at the M-transformer input worked best, producing more stable and emotionally expressive motions.
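
The snippet below sketches one way emotion information can enter at the M-transformer input, assuming simple additive conditioning: the same emotion vector is added to every motion-token embedding before the first transformer layer. The function name `inject_emotion` and the toy values are hypothetical, and this is not necessarily how the project integrates the embedding.

```python
def inject_emotion(token_embeddings: list[list[float]],
                   emotion_vec: list[float]) -> list[list[float]]:
    """Add the same emotion vector to every token embedding in a sequence.

    Hypothetical additive conditioning at the transformer input: the result has
    the same shape as `token_embeddings`, so the transformer itself is unchanged.
    """
    dim = len(emotion_vec)
    for i, tok in enumerate(token_embeddings):
        if len(tok) != dim:
            raise ValueError(f"token {i} has size {len(tok)}, expected {dim}")
    return [[t + e for t, e in zip(tok, emotion_vec)] for tok in token_embeddings]


# Toy input: 3 motion-token embeddings of size 4 (values are illustrative).
tokens = [[0.0, 0.0, 0.0, 0.0],
          [1.0, 1.0, 1.0, 1.0],
          [2.0, 2.0, 2.0, 2.0]]
emotion = [0.5, -0.5, 0.0, 1.0]  # e.g. the output of an emotion embedder
conditioned = inject_emotion(tokens, emotion)
print(conditioned[1])  # [1.5, 0.5, 1.0, 2.0]
```

Addition keeps both the sequence length and the transformer's input size unchanged; prepending the emotion vector as an extra token is a common alternative that instead lengthens the sequence by one.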